Deep Learning techniques for advanced image processing in manufacturing processes

In the sixth instalment of the exclusive insights and expert opinions from the openZDM project partners series, we are delighted to present Arantza Bereciartua, Senior Researcher and Project Manager from TECNALIA Research & Innovation.

Introducing digital tools that automate tasks in manufacturing processes is a major, non-trivial challenge. The benefits are clear and diverse, so digitalization is under way in many industrial manufacturing processes, although there is still a long way to go. Among the tasks most often automated are those that reproduce visual assessments objectively and repeatably, at high speed, with accuracy, and across 100% of production.

At the same time, image processing and deep learning techniques have gained wide acceptance in recent years because they have made it possible to tackle some of these challenges. The literature describes many computer vision applications that solve different problems in fields such as health, economics, agriculture, entertainment and, of course, industry.

The concept of Deep Learning

Deep learning has transformed computer vision by changing the way we process and understand images. Compared with classical image processing techniques, deep learning models have demonstrated superior performance in many tasks. Here is an overview of where deep learning has outperformed classical image processing methods:

- Feature Extraction and Representation:

– Classical Image Processing: Traditional methods rely on handcrafted features and filters to represent and analyze images. Engineers design these features based on their understanding of the problem, which can be limiting when dealing with complex, diverse data. Robust extraction of features can be hard and prone to errors.

– Deep Learning: Deep neural networks automatically learn hierarchical representations from raw pixel data. Convolutional Neural Networks (CNNs), used in particular for image processing, can capture and learn complex patterns and features within images, adapting to the data without manual feature engineering. This makes the process more robust and trustworthy.

- Scalability:

– Classical Image Processing: Traditional techniques often struggle with scalability. Designing and fine-tuning feature extractors for different tasks and datasets can be time-consuming. Domain adaptation to changes in illumination conditions or other variations can be very difficult and may require a complete review of the feature extraction algorithms.

– Deep Learning: Deep learning models can scale efficiently. Once trained on a large and diverse dataset, they can generalize quite well, reducing the need for extensive feature engineering and adaptation.

- Object recognition:

– Classical Image Processing: Classic methods face challenges in object recognition when dealing with variations in scale, lighting, and pose. They often require laborious pre-processing steps and may fail with complex scenes.

– Deep Learning: Deep neural networks have demonstrated remarkable object recognition capabilities, even in challenging conditions. Models like ConvNets, Region-based CNNs, and Transformer-based architectures can handle a wide range of object recognition tasks, surpassing classical methods in accuracy.

- Semantic segmentation and object detection:

– Classical Image Processing: Classical methods for semantic segmentation or object detection, such as thresholding and contour-based techniques that extract handcrafted features, struggle with complex scenes and fine-grained object detection. They can be less robust and accurate, especially when handling object occlusion and variation.

– Deep Learning: Deep learning models have significantly improved semantic segmentation accuracy. Fully Convolutional Networks (FCNs) and U-Net architectures, for example, perform very well at segmenting objects in images, making them crucial for applications such as medical image analysis, autonomous driving, or defect detection in industrial settings. Deep learning-based object detectors, such as Faster R-CNN, YOLO (You Only Look Once), and SSD (Single Shot MultiBox Detector), have set new benchmarks in object detection accuracy, offering real-time performance and robustness across diverse scenarios.

- Transfer learning:

– Deep Learning: Deep learning models benefit from transfer learning, where pre-trained networks are fine-tuned on specific tasks with limited data. This significantly reduces the data requirements and accelerates model development, a capability that classical image processing techniques lack.
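
To make the contrast in the list above concrete, here is a minimal sketch of the classical route: a hand-designed filter followed by a manually chosen threshold. The Sobel kernel is a standard edge filter; the toy image, the `filter2d` and `threshold` helpers and the threshold level are illustrative assumptions, not part of any openZDM code. A CNN applies the same sliding-window operation, but learns the kernel values from data instead of having an engineer choose them.

```python
# Hand-designed Sobel kernel: responds to horizontal intensity changes (vertical edges).
SOBEL_X = [[-1, 0, 1],
           [-2, 0, 2],
           [-1, 0, 1]]

def filter2d(image, kernel):
    """Slide a kernel over a 2-D list of pixel values ('valid' mode, no padding).

    This is cross-correlation, which is also what CNN layers compute in practice.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

def threshold(response, level):
    """Binarise a filter response with a manually chosen threshold."""
    return [[1 if abs(v) >= level else 0 for v in row] for row in response]

# Toy 5x6 image (assumption): dark left half (0), bright right half (10).
image = [[0, 0, 0, 10, 10, 10] for _ in range(5)]
edges = filter2d(image, SOBEL_X)
mask = threshold(edges, level=20)  # the level is a hand-tuned, illustrative value
```

In this sketch both the kernel and the threshold are fixed by hand, which is exactly what has to be revisited when illumination or product appearance changes.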

In summary, deep learning has surpassed classical image processing techniques in most applications and has become the foundation for a wide range of cutting-edge applications in computer vision.

Solutions integrated with the openZDM Platform

In the openZDM project, a platform that contributes to Zero Defect Manufacturing is being built to manage non-destructive technologies installed in-line for inspecting 100% of the products. The openZDM platform also aims to gather the information extracted by advanced algorithms. Vision-based non-destructive technologies are used to detect defects or anomalies and to estimate values and features of the manufactured elements.

Example: Glass bottles manufacturing

One of the use cases tackled in the openZDM project focuses on the manufacturing of glass bottles. Glass bottle manufacturing is already a highly automated process, but it still presents some challenges. Manufacturers often produce bottles of different colours, shapes and diameters. Wall thickness is a critical quality parameter: if the thickness in specific regions of the bottle is not correct, the bottle might break (for example, due to the pressure the liquid applies, or when the bottle hits a surface or another bottle in the filling line). Guaranteeing the quality and resistance of the bottle is therefore critical.

The non-destructive inspection systems in this use case have a double objective:

- To estimate the thickness of the bottle with high precision as soon as the bottle is manufactured.

- To identify, control and tune the process variables that lead to optimal thickness in the bottle.

The role of NDI systems

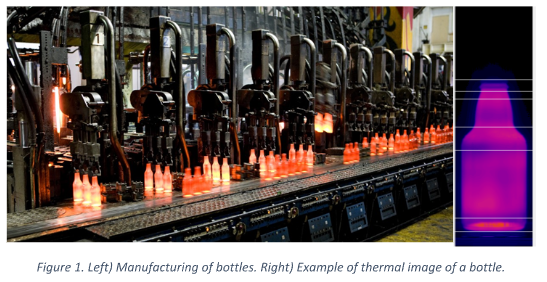

One Non-Destructive Inspection (NDI) system is dedicated to estimating the bottle wall thickness distribution from thermographic images. Bottle thickness is currently measured by sensors at the cold end of the line, around 45-70 minutes after the bottles are manufactured. This delay is too long, so the ability to react to problems is often limited. The moment the bottles are manufactured is illustrated here together with a thermographic image.

An expert system based on image processing and deep learning is needed to extract the valuable information from the thermal images and link that information with other variables of the process in order to estimate the thickness of the bottle.

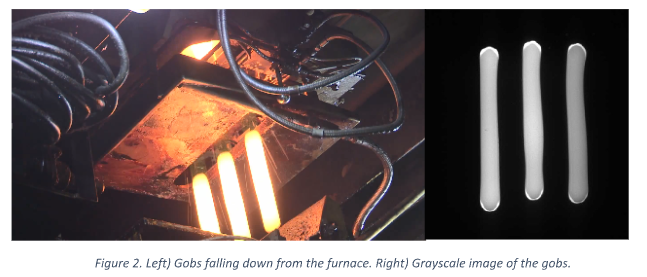

At the same time, experts in the glass manufacturing process know that the thickness of the bottle is influenced by gob quality. The gobs fill the molds to become the bottles, which are then inspected by the thermographic camera and, later, by the sensors that measure thickness at different points. The length, speed, orientation and other features of the gob affect how it is molded and, in consequence, the final characteristics of the bottle. Analyzing gob quality and its relationship with the thermographic image of the resulting bottle and with the final thickness values can help to better understand the process and to guarantee good thickness values in the bottles.

From a classical image processing point of view, ad-hoc algorithms would have to be designed and developed to extract as many meaningful features as possible from the gobs. The shape features may differ only subtly, so estimating the thresholds that discriminate good bottles from bad ones is a challenge, and the parameters of the feature extraction algorithm would probably have to be tuned depending on the bottle reference or other process variables.
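
As an illustration of what such handcrafted feature extraction can look like, the sketch below computes a few shape descriptors from a binary mask using image moments. It assumes the gob has already been segmented from the background; the `shape_features` function, the toy mask and the choice of descriptors are hypothetical and are not taken from the openZDM implementation.

```python
import math

def shape_features(mask):
    """Compute simple shape descriptors of the foreground (value 1) in a binary mask."""
    pixels = [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    if not pixels:
        raise ValueError("mask contains no foreground pixels")
    area = len(pixels)
    cx = sum(x for x, _ in pixels) / area
    cy = sum(y for _, y in pixels) / area
    # Second-order central moments describe how the blob spreads around its centroid.
    mu20 = sum((x - cx) ** 2 for x, _ in pixels) / area
    mu02 = sum((y - cy) ** 2 for _, y in pixels) / area
    mu11 = sum((x - cx) * (y - cy) for x, y in pixels) / area
    # Angle of the major axis relative to the image x-axis, in degrees
    # (image coordinates: y grows downwards).
    angle = math.degrees(0.5 * math.atan2(2 * mu11, mu20 - mu02))
    xs = [x for x, _ in pixels]
    ys = [y for _, y in pixels]
    return {
        "area": area,
        "width": max(xs) - min(xs) + 1,
        "height": max(ys) - min(ys) + 1,
        "orientation_deg": angle,
    }

# Toy mask (assumption): a vertical 2x6 bar standing in for a segmented gob.
gob_mask = [[0, 1, 1, 0] for _ in range(6)]
features = shape_features(gob_mask)
```

Even in this small sketch, the segmentation step and the set of descriptors are design decisions that may need retuning for each bottle reference.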

Usually, in the classical machine learning approach, the extraction of handcrafted features is followed by training a classifier (Support Vector Machine, Random Forest, k-Nearest Neighbors, etc.) that takes the feature vector as input and infers the quality of the bottle in terms of thickness as output.
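
The feature-vector-to-label step can be sketched with a k-Nearest Neighbors classifier written in plain Python. The feature vectors (gob length and orientation), the labels `"ok"` and `"thin"`, and all numeric values are made up for illustration; a real system would use measured data and typically a library implementation.

```python
import math
from collections import Counter

def knn_predict(train_X, train_y, query, k=3):
    """Label a feature vector by majority vote among its k nearest training samples."""
    distances = sorted(
        (math.dist(x, query), label) for x, label in zip(train_X, train_y)
    )
    votes = Counter(label for _, label in distances[:k])
    return votes.most_common(1)[0][0]

# Hypothetical, made-up feature vectors: (gob length in mm, orientation in degrees).
train_X = [(100, 1), (102, 2), (98, 0), (120, 9), (118, 11), (123, 8)]
train_y = ["ok", "ok", "ok", "thin", "thin", "thin"]

prediction = knn_predict(train_X, train_y, query=(101, 1.5), k=3)
```

Because the vote depends on raw Euclidean distance, features measured in different units would normally be normalised first, which is one more parameter to maintain in this kind of pipeline.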

However, this approach is highly dependent on parameters and conditions. Therefore, the next stage is an AI-based solution: a deep learning-based model that can be developed to link gob quality with bottle thickness values.
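
The source does not describe the model architecture, so the following is only a toy sketch of the general idea: a network that learns a mapping from gob features to a thickness value by gradient descent rather than through hand-set thresholds. It uses a single hidden layer, far shallower than a real deep model, and the data, the linear relation behind it and the `train_tiny_network` helper are all invented for illustration.

```python
import math
import random

def train_tiny_network(samples, hidden=4, epochs=500, lr=0.05, seed=0):
    """Fit a one-hidden-layer tanh network to (inputs, target) pairs with SGD.

    Returns (predict, losses), where losses holds the mean squared error per epoch.
    """
    rng = random.Random(seed)
    n_in = len(samples[0][0])
    w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(hidden)]
    b1 = [0.0] * hidden
    w2 = [rng.uniform(-0.5, 0.5) for _ in range(hidden)]
    b2 = 0.0

    def forward(x):
        h = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
             for row, b in zip(w1, b1)]
        return h, sum(w * hi for w, hi in zip(w2, h)) + b2

    losses = []
    for _ in range(epochs):
        total = 0.0
        for x, target in samples:
            h, y = forward(x)
            err = y - target
            total += err * err
            for j in range(hidden):
                grad_h = err * w2[j] * (1.0 - h[j] ** 2)  # backprop through tanh
                w2[j] -= lr * err * h[j]
                for i in range(n_in):
                    w1[j][i] -= lr * grad_h * x[i]
                b1[j] -= lr * grad_h
            b2 -= lr * err
        losses.append(total / len(samples))

    return (lambda x: forward(x)[1]), losses

# Synthetic, made-up data: two normalised gob features -> a normalised thickness value.
rng = random.Random(1)
samples = []
for _ in range(20):
    x = (rng.uniform(-1, 1), rng.uniform(-1, 1))
    samples.append((x, 0.5 * x[0] - 0.3 * x[1]))  # invented linear relation

predict, losses = train_tiny_network(samples)
```

Comparing `losses[0]` with `losses[-1]` shows how much the fit improves during training; a real model would work on images or richer process data and would be validated on bottles not seen during training.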

Summary

Let’s see whether this deep learning-based solution can solve this challenging manufacturing use case efficiently and accurately.